IBM Cloud Pak for Data is an extensible, end-to-end platform for data governance, analytics, and AI that runs on Red Hat OpenShift on IBM Cloud. You can use it to collect, organize, and analyze your data and turn it into useful insight. It helps you put your data to work quickly by making trusted data easy to find and access, so you can make data-driven decisions and operationalize AI across your whole organization with trust and transparency. In this article, Yeuesports explains what IBM Cloud Pak for Data is and how it works.

What is IBM Cloud Pak for Data?

IBM Cloud Pak for Data is a cloud-native solution that helps you use your data quickly and effectively.

Your company has a lot of data. To benefit from it, you need to turn that data into insights that help you prevent problems and reach your goals.

But data you can’t access or trust is useless. Cloud Pak for Data addresses both problems by letting you connect to your data, govern it, find it, and analyze it. It also gives all of your data users a single, unified interface where they can work together, backed by a set of services designed to complement one another.

Cloud Pak for Data improves productivity by letting users search for existing data or request access to it. With modern tools that support analytics and remove barriers to collaboration, users spend less time looking for data and more time using it.

Additionally, because these services are delivered on one platform, your IT department doesn’t have to set up numerous programs on separate systems and then try to connect them.

Run anywhere

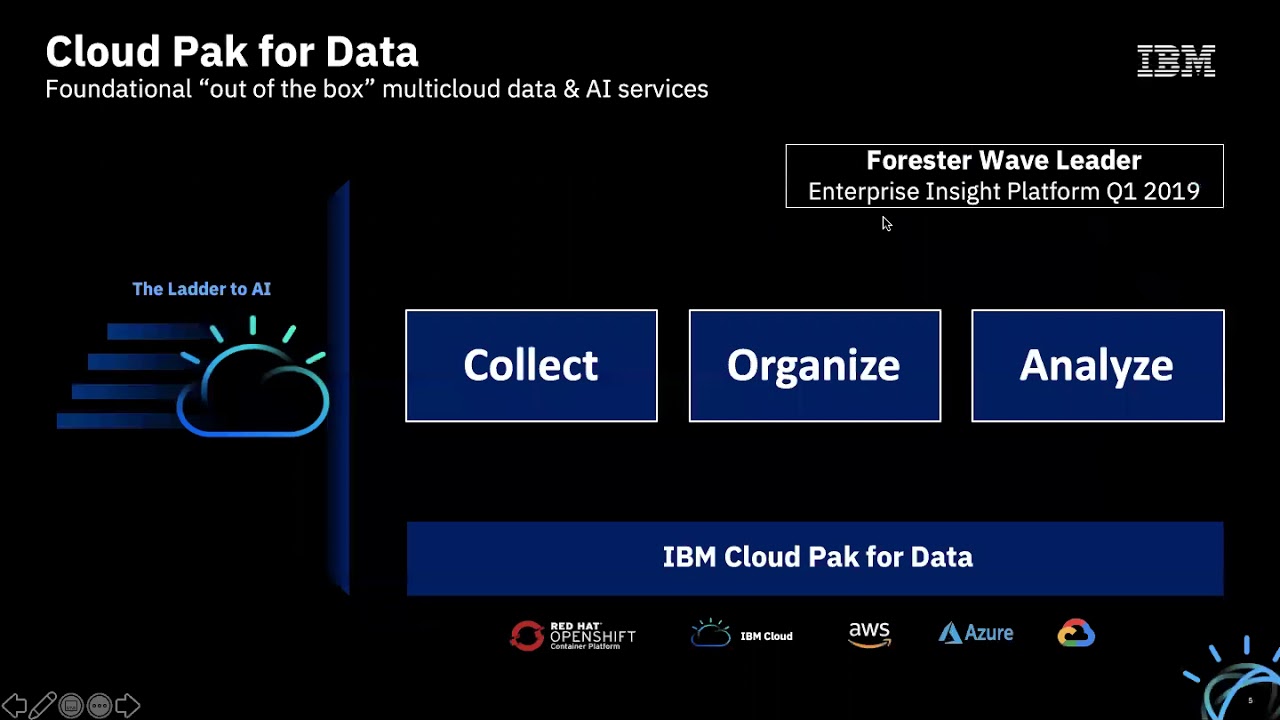

IBM Cloud Pak for Data runs on Red Hat OpenShift clusters, so you can deploy it on your local network or in the cloud.

In the cloud: If you deploy OpenShift on IBM Cloud, AWS, Microsoft Azure, or Google Cloud, you can deploy Cloud Pak for Data on that cluster.

On a private system: If you want to keep your deployment behind a firewall, you can run IBM Cloud Pak for Data on your own cluster.

If most of your business data is behind a firewall, it makes sense to keep the applications that access that data behind the firewall too, which reduces the risk of accidentally exposing it.

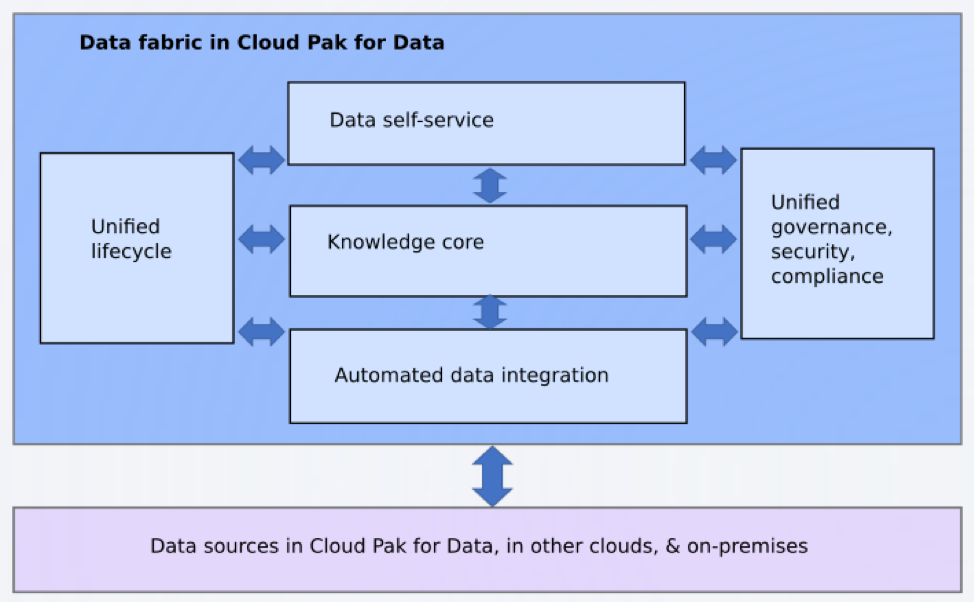

IBM Cloud Pak for Data’s Data Fabric

A data fabric is an architectural pattern for managing widely distributed and disparate data. Because it is designed for hybrid and multicloud environments, a data fabric decouples where data is stored, where it is processed, and where it is used. With the intelligent knowledge catalog, you can turn your data into a globally governed enterprise asset, no matter where it is stored, processed, or used. Catalog assets are automatically enriched with metadata that describes the logical relationships between data sources and adds semantic meaning, so you can deliver business-ready data to your applications, services, and users.

The data fabric architecture in Cloud Pak for Data helps your company speed up data analysis and reach better insights sooner.

With the Cloud Pak for Data data fabric architecture, you can:

- Simplify and automate access to data across multiple clouds and on-premises sources without moving the data.

- Protect every use of data, regardless of its source.

- Give business users a self-service experience for finding and using data.

- Use AI-powered tools to orchestrate and automate the data lifecycle.

The accompanying graphic shows how the platform connects to your existing data sources, along with the five key capabilities of the data fabric.

Knowledge core based on metadata

Data stewards enrich data with metadata so that it can be found more effectively through semantic search. They use automated discovery and classification to curate data into catalogs, and they can further enrich data assets by creating and assigning custom governance artifacts such as business vocabularies. They can also import ready-to-use, industry-specific metadata collections from Knowledge Accelerators.
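To make automated classification more concrete, here is a minimal Python sketch, assuming a simple rule-based approach: each column’s values are tested against regular-expression "data classes," and a matching class is mapped to a business term. The data class names, patterns, threshold, and `GLOSSARY` mapping are all hypothetical, and this is not how Cloud Pak for Data implements discovery. It only illustrates what attaching metadata to a data asset means.

```python
import re

# Hypothetical data classes: name -> pattern a column's values must match.
# These are illustrative only, not Cloud Pak for Data's built-in data classes.
DATA_CLASSES = {
    "Email Address": re.compile(r"^[\w.+-]+@[\w-]+\.[\w.-]+$"),
    "US Phone Number": re.compile(r"^\d{3}-\d{3}-\d{4}$"),
}

# Hypothetical business glossary: data class -> business term a steward assigned.
GLOSSARY = {
    "Email Address": "Customer Contact",
    "US Phone Number": "Customer Contact",
}


def classify_column(values, threshold=0.8):
    """Return the first data class matched by at least `threshold` of the
    non-empty values, or None if no class qualifies."""
    non_empty = [v for v in values if v]
    if not non_empty:
        return None
    for name, pattern in DATA_CLASSES.items():
        hits = sum(1 for v in non_empty if pattern.match(v))
        if hits / len(non_empty) >= threshold:
            return name
    return None


def enrich(table):
    """Build catalog-style metadata for each column of a {column: values} table."""
    metadata = {}
    for column, values in table.items():
        data_class = classify_column(values)
        metadata[column] = {
            "data_class": data_class,
            "business_term": GLOSSARY.get(data_class),
        }
    return metadata


if __name__ == "__main__":
    customers = {
        "contact": ["ana@example.com", "li@example.org", ""],
        "phone": ["555-123-4567", "555-987-6543", "555-000-1111"],
        "city": ["Austin", "Lyon", "Pune"],
    }
    print(enrich(customers))
```

The threshold lets a column with a few blank or malformed values still be classified, which is closer to how messy real data behaves than requiring every value to match.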

Self-service data access in catalogs

Data scientists and other business users find the data they need in catalogs that hold data from across the enterprise. They can browse for data, check which assets their peers rate highly, or use AI-powered semantic search and recommendations that take asset metadata into account. To prepare, analyze, and model the data together, they copy data assets from a catalog into a project.

Automatic integration of data

Data engineers and other users prepare your data for use. They can automate data preparation and provide access to data within your existing data architecture. They can virtualize and combine data so that it is faster and easier to query. To publish updated data assets consistently, they can automate bulk data ingestion, data cleansing, and complex transformations. They can also push data processing down to where the data resides.

Unified data governance, security, and compliance

Data stewards can define data protection policies that automatically enforce data privacy across the platform. Data masking hides sensitive values while keeping the data useful, and it removes the need to maintain separate, sanitized copies of the data. Data stewards can also import ready-to-use compliance metadata from Knowledge Accelerators.
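Here is a minimal sketch of policy-based masking, assuming columns have already been assigned data classes (as in catalog curation) and that a policy is simply a list of rules. Each rule names a data class, a masking method, and the roles exempt from it. The `MaskingRule` type, the `redact` and `partial` methods, and the role names are hypothetical illustrations, not the Cloud Pak for Data policy API. What the sketch shows is that masking is applied when data is read, so no duplicate sanitized copy has to be stored.

```python
from dataclasses import dataclass


# Hypothetical rule: mask columns of `data_class` with `method`
# unless the reading user's role is listed in `exempt_roles`.
@dataclass(frozen=True)
class MaskingRule:
    data_class: str
    method: str  # "redact" or "partial" in this sketch
    exempt_roles: frozenset = frozenset()


def mask_value(value, method):
    """Return a masked version of a string value."""
    if method == "redact":
        return "X" * len(value)
    if method == "partial":
        # Keep the last four characters so the value stays useful for matching.
        return "*" * max(len(value) - 4, 0) + value[-4:]
    raise ValueError(f"unknown masking method: {method}")


def read_with_policies(rows, column_classes, rules, role):
    """Return masked copies of `rows` for `role`; the stored rows are never modified."""
    rules_by_class = {rule.data_class: rule for rule in rules}
    masked_rows = []
    for row in rows:
        out = {}
        for column, value in row.items():
            rule = rules_by_class.get(column_classes.get(column))
            if rule and role not in rule.exempt_roles:
                out[column] = mask_value(value, rule.method)
            else:
                out[column] = value
        masked_rows.append(out)
    return masked_rows


if __name__ == "__main__":
    rows = [{"name": "Ana", "ssn": "123-45-6789", "card": "4111111111111111"}]
    column_classes = {"ssn": "US SSN", "card": "Credit Card Number"}
    rules = [
        MaskingRule("US SSN", "redact"),
        MaskingRule("Credit Card Number", "partial", frozenset({"fraud_analyst"})),
    ]
    print(read_with_policies(rows, column_classes, rules, "data_scientist"))
    print(read_with_policies(rows, column_classes, rules, "fraud_analyst"))
```

Because masking happens inside `read_with_policies`, the same stored rows serve every role; only the view each role receives differs.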

Unified lifecycle

Users can design, build, test, orchestrate, deploy, and monitor different types of data pipelines in a consistent way. They can create or find data assets, search for them across the platform, and move them between workspaces. They can also orchestrate data transformations and other tasks by setting up jobs that run automatically.
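At its core, orchestration means running each task only after the tasks it depends on have finished. The sketch below assumes a pipeline is expressed as a mapping from each task name to its prerequisites and uses Python’s standard `graphlib.TopologicalSorter` (Python 3.9+) to derive a valid run order. The task names are hypothetical, and scheduling and retries are not shown. Cloud Pak for Data defines pipelines and jobs through its own tooling, not through code like this.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: task name -> set of tasks that must finish first.
PIPELINE = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "cleanse_orders": {"ingest_orders"},
    "join_orders_customers": {"cleanse_orders", "ingest_customers"},
    "publish_asset": {"join_orders_customers"},
}


def run_pipeline(dependencies, tasks):
    """Run every task after all of its dependencies and return the execution order."""
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()  # execute the task's callable
        order.append(name)
    return order


if __name__ == "__main__":
    # Each hypothetical task just reports that it ran.
    tasks = {name: (lambda n=name: print(f"running {n}")) for name in PIPELINE}
    run_pipeline(PIPELINE, tasks)
```

`TopologicalSorter` raises `graphlib.CycleError` if the dependencies form a cycle, which is a useful early check when a pipeline definition is edited.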

Assistance with your data lifecycle

Your data changes constantly, so your machine learning models must evolve too. To keep them producing useful insights, you need to continuously test and fine-tune them as new data arrives in your on-premises and cloud data sources. Retraining only helps if the data itself is sound, so you can also deploy data governance, integration, and preparation services on Cloud Pak for Data to make sure you are working with high-quality data.

You’ve probably heard the saying, “Garbage in, garbage out.” Poor-quality data leads to meaningless results. By bringing your data scientists together with your data stewards and data engineers, you can make sure your data is ready for analysis.

You can also add the analytics assets your data scientists produce, such as models, notebooks, and Shiny apps, to a data catalog so that they are managed and maintained like any other data asset in your company.

As more data flows into your ecosystem, Cloud Pak for Data helps you keep drawing fresh, valuable insights from it.

Final thought

IBM Cloud Pak for Data helps increase productivity and reduce complexity. With it, you can build a data fabric that connects siloed data distributed across a hybrid cloud environment.

So what is IBM Cloud Pak for Data? It is a platform that offers a broad range of IBM and third-party services covering the entire data lifecycle. You can deploy it as a fully managed service on IBM Cloud or as on-premises software built on the Red Hat OpenShift Container Platform.
